Image Classification

1. Image Classification is the task of using computer vision and machine learning algorithms to extract meaning from an image. It is the task of assigning a label to an image from a predefined set of categories.

2. The Semantic Gap is the difference between how a human perceives the contents of an image and how that image can be represented in a way a computer can understand.

3. Feature Extraction is the process of taking an input image, applying an algorithm, and obtaining a feature vector that quantifies the image.
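As a minimal sketch of this idea, the snippet below quantifies an RGB image with a six-number feature vector: the mean and standard deviation of each color channel. The function name `color_stats_features` and the choice of descriptor are assumptions made for illustration; real pipelines typically use richer descriptors.

```python
import numpy as np

def color_stats_features(image):
    """Return a 6-dimensional feature vector: per-channel mean and std."""
    # image is assumed to be an (H, W, 3) array of pixel intensities
    pixels = image.reshape(-1, 3).astype(np.float64)
    means = pixels.mean(axis=0)
    stds = pixels.std(axis=0)
    return np.concatenate([means, stds])

# A tiny synthetic 2x2 "image" used only to exercise the function
image = np.array([[[0, 0, 0], [255, 0, 0]],
                  [[0, 255, 0], [255, 255, 0]]], dtype=np.uint8)
features = color_stats_features(image)
print(features)
```

Whatever the input resolution, the output always has the same length, which is what lets a downstream classifier compare images.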

4. The common supervised learning algorithms include Logistic Regression, Support Vector Machines, Random Forests, and Artificial Neural Networks.

5. Unsupervised Learning, which is also called self-taught learning, has no labels associated with the input data, and thus we cannot correct our model if it makes an incorrect prediction.

6. The k-Nearest Neighbor classifier doesn’t actually learn anything; it relies directly on the distances between feature vectors.
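A minimal sketch of this behavior, assuming Euclidean distance and a majority vote among the `k` closest training vectors (the helper name `knn_predict` and the toy data are illustrative, not from the source):

```python
import numpy as np
from collections import Counter

def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    # Euclidean distance from the query to every stored training vector
    distances = np.linalg.norm(train_X - query, axis=1)
    # Indices of the k smallest distances
    nearest = np.argsort(distances)[:k]
    votes = Counter(str(train_y[i]) for i in nearest)
    return votes.most_common(1)[0][0]

# Toy 2-D feature vectors: one cluster per class
train_X = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
                    [5.0, 5.0], [5.1, 4.9], [4.8, 5.2]])
train_y = np.array(["cat", "cat", "cat", "dog", "dog", "dog"])

prediction = knn_predict(train_X, train_y, np.array([4.9, 5.0]), k=3)
print(prediction)
```

Note that there is no training step: the "model" is simply the stored training data, and all of the work happens at prediction time.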

7. A learning model that summarizes data with a set of parameters of fixed size which is independent of the number of training examples is called a Parametric Model. No matter how much data you throw at the parametric model, it won’t change its mind about how many parameters it needs. – Russell and Norvig.

8. Parameterization is the process of defining the necessary parameters of a given model. In machine learning, parameterization involves four key components: data, a scoring function, a loss function, and weights and biases.

9. The scoring function accepts the data as an input and maps the data to class labels. A loss function quantifies how well our predicted class labels agree with our ground-truth labels.

10. Softmax Classifiers give probabilities for each class label while hinge loss gives the margin scores.
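The sketch below puts the scoring function and both losses side by side. It assumes a linear scoring function `W @ x + b` and the common multi-class hinge formulation with a margin of 1; the weights, input, and function names are made up for illustration.

```python
import numpy as np

def scores(W, b, x):
    """Linear scoring function: one raw score per class."""
    return W @ x + b

def softmax_cross_entropy(s, y):
    """Return class probabilities and the cross-entropy loss for true class y."""
    shifted = s - np.max(s)  # subtract the max for numerical stability
    probs = np.exp(shifted) / np.sum(np.exp(shifted))
    return probs, -np.log(probs[y])

def multiclass_hinge(s, y, margin=1.0):
    """Sum of margin violations of the wrong classes against the true class."""
    margins = np.maximum(0.0, s - s[y] + margin)
    margins[y] = 0.0  # the true class does not compete with itself
    return np.sum(margins)

W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])  # 3 classes, 2 features
b = np.zeros(3)
x = np.array([2.0, 1.0])
y = 0  # index of the ground-truth class

s = scores(W, b, x)
probs, ce_loss = softmax_cross_entropy(s, y)
hinge_loss = multiclass_hinge(s, y)
print(s, probs, ce_loss, hinge_loss)
```

In this example the true class beats every other class by at least the margin, so the hinge loss is zero, while the cross-entropy loss stays positive because the predicted probability of the true class is still below 1.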

11. The gradient descent method is an iterative optimization algorithm that operates over a loss landscape, also called an optimization surface. Gradient descent also refers to the process of optimizing the parameters for low loss and high classification accuracy by repeatedly taking a step in the direction that minimizes the loss.

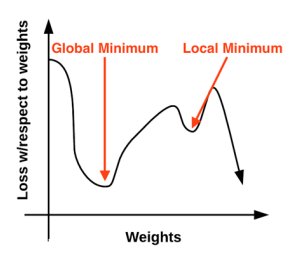

Figure: The naive loss visualized as a 2D plot.

As shown in the figure, the loss landscape has many peaks and valleys. Each peak is a local maximum that represents a region of very high loss. The local maximum with the largest loss across the entire loss landscape is the global maximum. Similarly, each valley is a local minimum that represents a region of low loss. The local minimum with the smallest loss across the loss landscape is the global minimum.
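A minimal sketch of the update rule, assuming a one-parameter loss `(w - 3)^2` chosen only because its minimum is known. Unlike the landscape in the figure, this bowl-shaped loss has a single minimum, so the descent cannot get stuck in a local valley.

```python
def loss(w):
    return (w - 3.0) ** 2

def gradient(w):
    # Derivative of (w - 3)^2 with respect to w
    return 2.0 * (w - 3.0)

w = 0.0               # arbitrary starting point
learning_rate = 0.1   # step size (an assumed value)

for step in range(100):
    # Step against the gradient, i.e. downhill on the loss surface
    w -= learning_rate * gradient(w)

print(w, loss(w))
```

Each iteration moves `w` a fraction of the way toward the minimum at 3; a learning rate that is too large would overshoot instead of converging.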

12. An optimization algorithm may not be guaranteed to arrive at even a local minimum in a reasonable amount of time, but it often finds a very low value of the loss function quickly enough to be useful. – Goodfellow.

13. Stochastic Gradient Descent (SGD) is a simple modification to the standard gradient descent algorithm that computes the gradient and updates the weight matrix on small batches of training data, rather than the entire training set.
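The difference from standard gradient descent can be sketched as follows. The example assumes a toy linear regression problem (`y = 2x + 1`, no noise), a mean-squared-error loss, and a batch size of 16; none of these come from the source.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=256)
y = 2.0 * X + 1.0      # synthetic targets with known slope and intercept

w, b = 0.0, 0.0
learning_rate = 0.1
batch_size = 16

for epoch in range(50):
    # Shuffle once per epoch so each batch is a different random subset
    order = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        xb, yb = X[idx], y[idx]
        error = w * xb + b - yb
        # Gradient of the mean squared error on this batch only
        grad_w = 2.0 * np.mean(error * xb)
        grad_b = 2.0 * np.mean(error)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b

print(w, b)
```

The weights are updated 16 times per pass over the data instead of once, which is why SGD usually makes progress much sooner than a full-batch update.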

14. Momentum is a method used to accelerate Stochastic Gradient Descent (SGD), enabling it to learn faster by focusing on dimensions whose gradients point in the same direction. Nesterov’s Acceleration can be conceptualized as a corrective update to the momentum which lets us obtain an approximate idea of where our parameters will be after the update.
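One common way to write the two update rules is sketched below, assuming a velocity term `v`, a momentum coefficient `gamma = 0.9`, and a simple elongated quadratic loss. The only difference in the Nesterov variant is that the gradient is evaluated at the look-ahead position `w + gamma * v` rather than at `w`.

```python
import numpy as np

def grad(w):
    # Gradient of the assumed loss 0.5 * (w[0]**2 + 10 * w[1]**2)
    return np.array([1.0, 10.0]) * w

def sgd_momentum(w, steps=200, lr=0.05, gamma=0.9, nesterov=False):
    v = np.zeros_like(w)  # velocity: a decaying sum of past gradient steps
    for _ in range(steps):
        # Nesterov evaluates the gradient where momentum is about to carry us
        lookahead = w + gamma * v if nesterov else w
        v = gamma * v - lr * grad(lookahead)
        w = w + v
    return w

start = np.array([1.0, 1.0])
w_classic = sgd_momentum(start, nesterov=False)
w_nesterov = sgd_momentum(start, nesterov=True)
print(np.linalg.norm(w_classic), np.linalg.norm(w_nesterov))
```

Both variants head toward the minimum at the origin; the full gradient is used here instead of mini-batches to keep the sketch short.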

15. Many strategies used in machine learning are explicitly designed to reduce the test error, possibly at the expense of increased training error. These strategies are collectively known as Regularization. – Goodfellow.

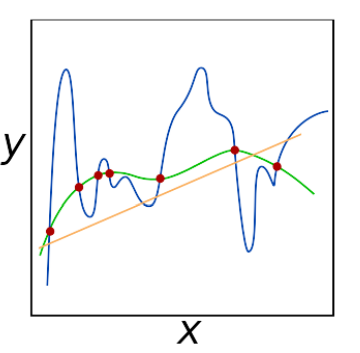

Figure: An example of model fitting.

The figure presents an example of underfitting (orange line), overfitting (blue line), and generalizing (green line). The goal of deep learning classifiers is to obtain these types of “green functions” that fit the training data nicely, but avoid overfitting. Regularization helps to obtain the desired fit.
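The source does not name a specific regularizer, so the sketch below assumes one common choice, L2 regularization (weight decay), which adds a penalty proportional to the sum of squared weights to the data loss. The function name, weight values, and strength `lam` are illustrative.

```python
import numpy as np

def l2_regularized_loss(data_loss, W, lam=0.01):
    """Add an L2 penalty on the weights to a precomputed data loss."""
    penalty = lam * np.sum(W ** 2)  # large weights are penalized more
    return data_loss + penalty

W = np.array([[1.0, -2.0], [0.5, 0.0]])
total = l2_regularized_loss(data_loss=0.8, W=W, lam=0.1)
print(total)
```

Because the penalty grows with the magnitude of the weights, minimizing the total loss discourages the extreme weight values that tend to accompany an overfit curve like the blue line.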